CASE STUDY

Cloud-Enabled IoT Data Monitoring & Maintenance

IoT, Cloud, Data Engineering, Predictive Maintenance

Shwaira Solutions | 01 Oct, 2025

Written by Shwaira Solutions

2 October 2025 | 5 min read

A global enterprise faced significant challenges in managing large-scale data pipelines across multiple systems. The core issues included:

We designed and implemented a modern, end-to-end data engineering solution to address these challenges:

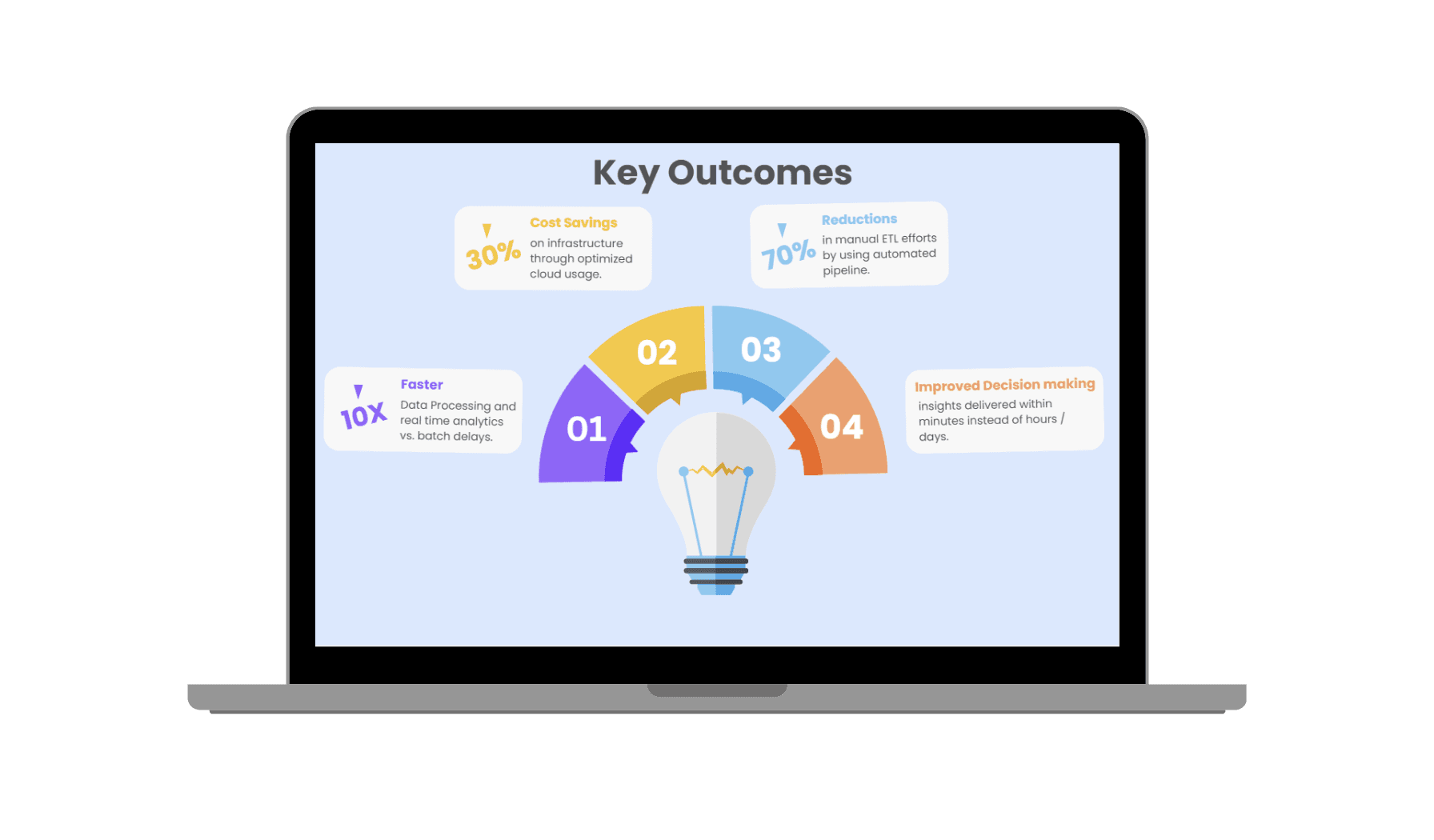

The new data platform delivered transformative results, fundamentally changing how the enterprise leverages its data.
